I’ve been running across this article all over Reddit for the past couple of days.

It’s driving me crazy.

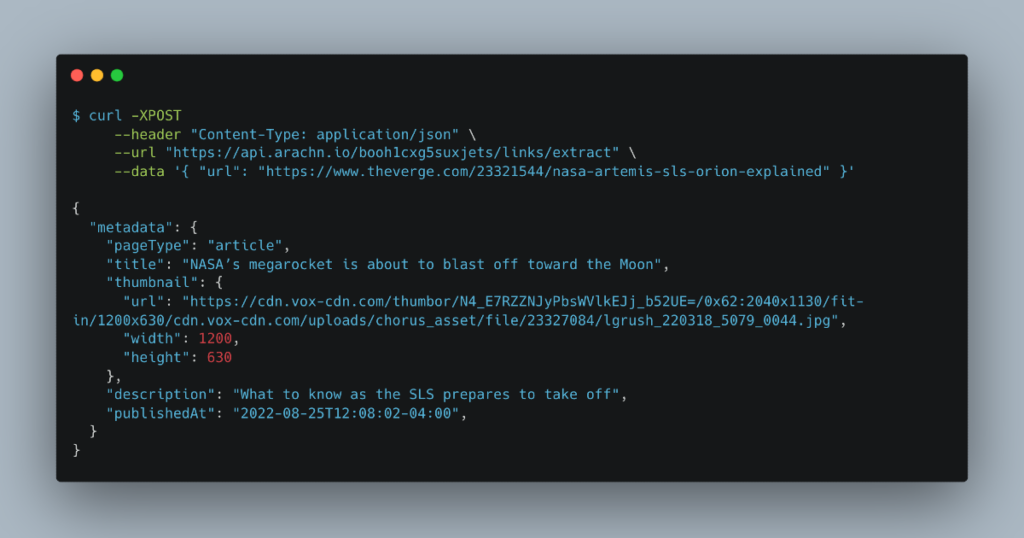

The article (rightfully) points out that Java’s humble String.hashCode() method — which maps arbitrary-length String objects to 32-bit int values — has collisions. The article also (wrongfully) makes this sound surprising, and claims that the String.hashCode() algorithm is bad on that basis. In the author’s own words:

No matter what hashing strategy is used, collisions are enevitable (sic) however some hashes are worse than others. You can expect String to be fairly poor.

That’s pretty strongly worded!

The author has demonstrated that String.hashCode() has collisions. However, being a 32-bit String hash function, String.hashCode() will by its nature have many collisions, so the existence of collisions alone doesn’t make it bad.

The author also demonstrated that there are many odd, short String values that generate String.hashCode() collisions. (The article gives many examples, such as !~ and "_.) But because String.hashCode()‘s hash space is small and it runs so fast, it will even be easy to find examples of collisions, so being able to find the collisions doesn’t make String.hashCode() bad either. (And hopefully no one expected it to be a cryptographic hash function to begin with!)

So none of these arguments that String.hashCode() is a “poor” hash function are convincing. Additionally, I have always found (anecdotally) that String.hashCode() manages collisions quite well for Real World Data.

So what does a “poor” hash function look like? And what does a “good” hash function look like? And where does String.hashCode() fall on that spectrum?

Continue reading “String.hashCode() is plenty unique”